Eliezer Yudkowsky

Part of a series on AI / Artificial Intelligence. [View Related Entries]

About

Eliezer Yudkowsky is a researcher and writer known for his work on artificial intelligence. He is notable for popularizing the idea that AI should be designed to be benign and useful toward humans from the start (a concept known as "friendly artificial intelligence" or "friendly AI") in order to avoid a scenario in which it explodes in intelligence and begins to pose a threat to humanity's well-being. Yudkowsky is also known for starting the Machine Intelligence Research Institute (MIRI) and the LessWrong internet forum.

Career

Eliezer Yudkowsky did not attend high school or college.[1] Between 1998 and 2000, he wrote "Notes on the Design of Self-Enhancing Intelligence"[2] and "Plan to Singularity,"[3] and revised "Notes on the Design" into "Coding a Transhuman AI."[4] In 2000, the Singularity Institute, now known as MIRI, was created.[5] Yudkowsky ran the Singularity Institute alone until 2008, with all of its publications bearing his name and with him listed as its sole employee.[6][7] Yudkowsky has also worked closely with Nick Bostrom, the philosopher credited with the Paperclip Maximizer thought experiment about AI alignment.

On February 28th, 2010, Yudkowsky published a Harry Potter fanfiction to FanFiction.net.[9] The work, titled "Harry Potter and the Methods of Rationality," is set in a world in which the titular character was raised by a biochemist and grew up reading science fiction. The approximately 660,000-word book has been lauded by fans of both Harry Potter and Yudkowsky,[11] and Yudkowsky has described the work as his method of trying to teach rationality to the masses.[10]

Online History

In 2008, Yudkowsky started the community blog LessWrong, a forum focused on discussions of philosophy, rationality, economics and artificial intelligence, among other topics. The blog is responsible for the genesis of Roko's Basilisk and contains extensive writing on the Waluigi Effect.

On May 5th, 2016, Yudkowsky gave a talk at Stanford University titled "AI Alignment: Why It's Hard and Where to Start." On December 28th, 2016, the Machine Intelligence Research Institute YouTube[8] channel uploaded a recording of the talk, which gathered more than 67,000 views over the next six years.

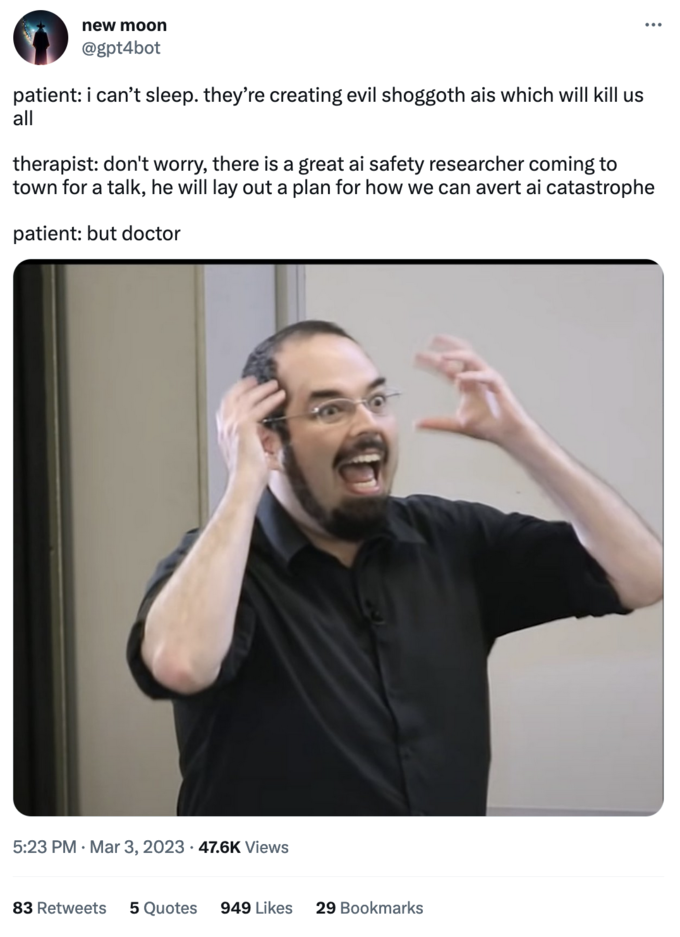

At the 1:11:21 timestamp in the video, Yudkowsky says, "aaahh!" and makes a scared face while gesturing with his hands by his head. A screen capture of this scene has since become a prevalent reaction image used in memes on Twitter among fans of his work.

Related Memes

Yudkowsky Screaming

On November 22nd, 2022, Twitter[12] user @SpacedOutMatt posted a tweet using an image he called yudkowsky_screaming.jpg, gathering over 290 likes in four months (seen below, left). On November 28th, Twitter[13] user @goth600 posted a Yudkowsky Screaming meme as well, alongside a caption that read, "Mfw the shrimp start downloading the Diplomacy weights through Starlink through tor." The post gathered over 40 likes in four months (seen below, right).

External References

[1] CNBC – 5 Minutes With Yudkowsky

[2] Internet Archive – Yudkowsky

[3] Internet Archive – Yudkowsky

[4] Internet Archive – Yudkowsky

[5] Internet Archive – SingInst.Org

[6] New Yorker – Doomsday Invention

[7] Machine Intelligence Research Institute – All Publications

[8] YouTube – Machine Intelligence Research Institute

[9] FanFiction – Harry Potter and the Methods of Rationality

[10] Facebook – Eliezer Yudkowsky

[11] Internet Archive – Top 10 Harry Potter Fanfictions

[12] Twitter – SpacedOutMatt

[13] Twitter – goth600
