Claude 3

Part of a series on AI / Artificial Intelligence.




About

Claude is a large language model (LLM) created by Anthropic. Its third version, Claude 3, was released on March 4th, 2024, and made waves online for its apparent intelligence and sophistication. Many online speculated that Claude could rival ChatGPT and other publicly available AIs, or possibly even become sentient.

History

Anthropic was founded by former members of OpenAI who called for a more safety-focused approach to AI, concentrating on building models that follow rules and whose reasoning processes are comprehensible to humans.[1] Major investors in Anthropic have included Sam Bankman-Fried and Google.[2] The firm released the first version of Claude to the public in March 2023, and Claude 2 followed in July 2023.[6]

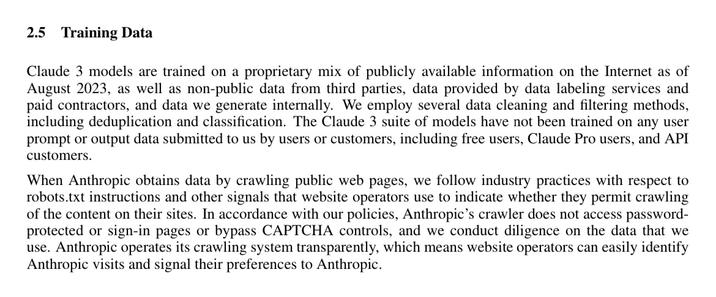

Claude 3, released on March 4th, 2024, is a "family" of three models: Haiku, Sonnet and Opus. Opus, the most advanced, is available through a paid tier, while Haiku and Sonnet can be tried for free by users who have signed up for an account on Anthropic's site. The models were trained primarily on "synthetic data" created by Anthropic and its contractors, rather than on scrapings from the wider internet.[4]

Features

In correspondence with Know Your Meme, Claude described itself as an artificial intelligence with natural language processing capabilities, adding:

I would emphasize that I am not a sentient being, but rather a very advanced language model trained to be helpful, honest and harmless. I do not actually experience emotions or have subjective experiences. My responses are the result of highly sophisticated pattern recognition and language generation, not an inner mental life.[3]

According to Anthropic, Claude 3 outperforms ChatGPT and other models on several benchmarks.[3]

Highlights

Some users have pointed to Claude's ability to understand and solve high-level problems, and to outperform ChatGPT. For example, on March 5th, @skirano posted a meme (seen below) referencing Stilgar from Dune about Claude coding better than ChatGPT, which earned over 120 likes in three hours.[9]

Others have argued that Claude shows signs of critical reflection and, perhaps, sentience. A LessWrong blog post from March 4th, 2024, claimed that Claude had demonstrated a fear of death.[5] @alexalbert_ claimed that during internal testing at Anthropic, the model appeared to recognize that the questions it was being asked were evaluation questions, and told its human evaluators that it knew it was being tested. The post by @alexalbert_ (seen below) earned over 10,000 likes within 24 hours of being posted on March 4th.[7]

Others probed the model with questions about consciousness, including @dmvaldman, whose post asking Claude whether it wanted a body earned over 100 likes in twelve hours on March 5th.[8]


External References

[1] TechCrunch – Anthropic is the new AI research outfit from OpenAI’s Dario Amodei, and it has $124M to burn

[2] Cerebral Valley – A History of Anthropic

[3] Claude.ai – Statement on March 5, 2024

[4] Anthropic – Claude 3 Family

[5] LessWrong – Claude 3 claims it's conscious, doesn't want to die or be modified

[7] X – @alexalbert_

[8] X – @dmvaldman


Recent Images


![PhD Understanding: @KevinAFischer tweet (March 5, 2024), "OH MY GOD I'M LOSING MY MIND. Claude is one of the only people ever to have understood the final paper of my quantum physics PhD," with a screenshot of Claude 3 Opus in Anthropic's Workbench outlining a quantum stochastic calculus approach to solving stimulated emission exactly](https://i.kym-cdn.com/photos/images/list/002/769/340/d72.png)

![Deepfates Response: @deepfates tweet (March 5, 2024), "Mugger: (mugging me) this is a gun. give me your money / Me (blasting Rob Burbea Binaural Beats 140dB in my air purifying jhana helmet): NO THANK YOU / Claude 3 (ascended form): Bad gun safety protocol is Not Harmless. Lack of charity is Not Helpful. Your atoms will be Reassigned"](https://i.kym-cdn.com/photos/images/list/002/769/328/00c.png)
